The Age of Reasoning: How AI is Redefining Intelligence and Global Power

Explore how OpenAI's o3 model surpassed human prodigies, signaling the end of the information age and the dawn of reasoning AI. This shift impacts markets, national security, and the very definition of human skill.


The Age of Reasoning: Beyond the Era of Approximation

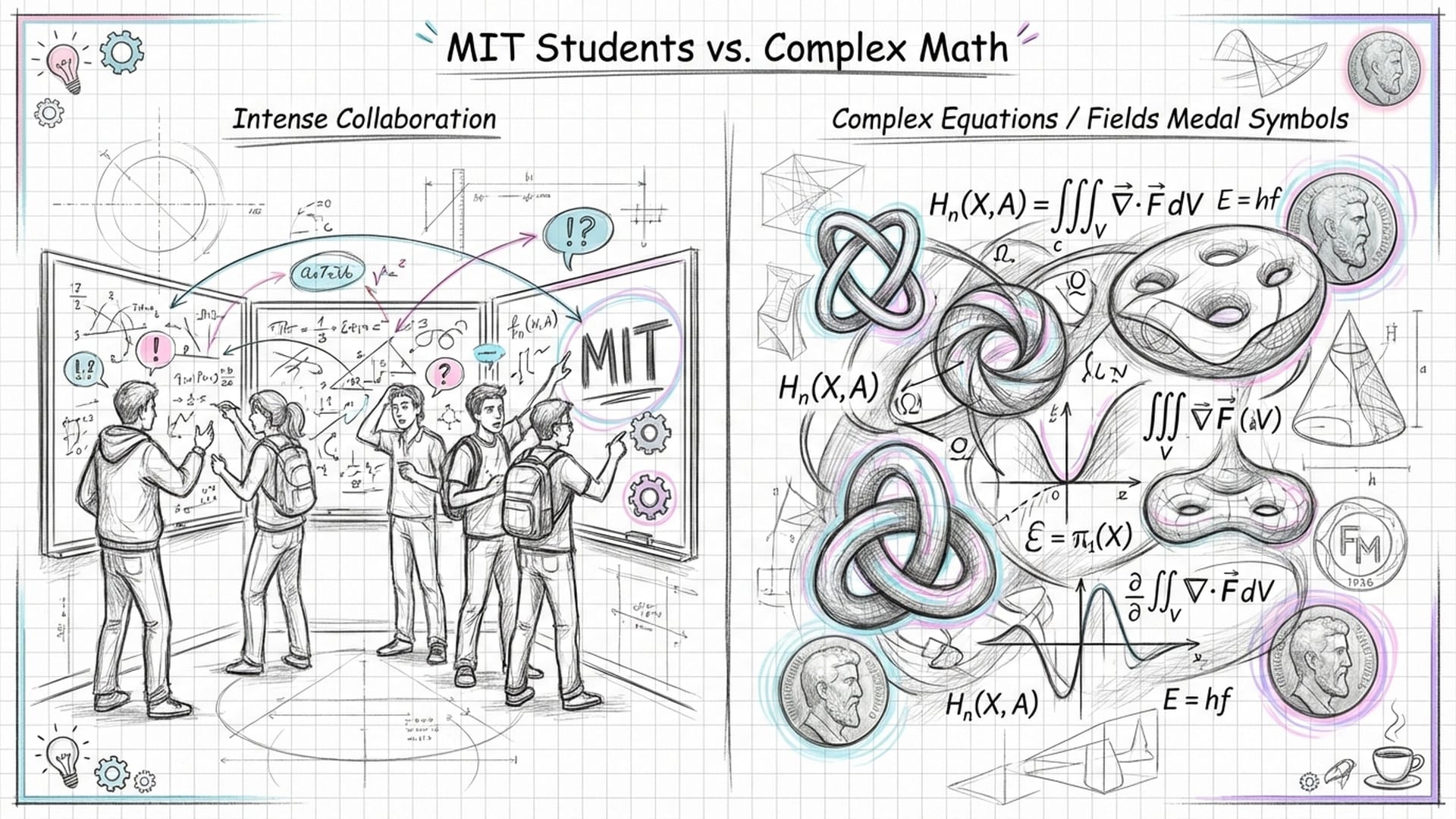

Imagine a room, not unlike those found in the hallowed halls of MIT, filled with the brightest young mathematical minds in the world. These aren't just students who aced calculus; they are individuals who dream in the intricate languages of topology and number theory, potential Fields Medalists in the making. Now, present them with a test: unique, unpublished mathematical problems crafted by sixty of the world’s leading mathematicians, designed to be effectively unsolvable without profound, creative, expert-level insight.

The human team delves into these challenges with intensity. They collaborate fiercely, argue passionately, and cover whiteboards with furious calculations. After hours of relentless work, their collective score stands at nineteen percent. A failing grade by conventional standards, yet an astonishing achievement given the extreme difficulty of the problems.

Now, consider the same test handed to an artificial intelligence model. Not a monolithic supercomputer, but a sophisticated piece of software running on servers. Before late 2024, the best AI models scored under two percent, relying on guesses and "hallucinations." When the problems were presented to OpenAI's o3 model, however, something remarkable happened. It didn't guess. It paused. It thought. And it returned a score of twenty-five point two percent.

A software program has just surpassed a team of MIT mathematics prodigies on some of the most challenging and creative problems ever conceived by humans. This is not about a faster calculator; it's the dawn of the Age of Reasoning.

We are no longer talking about chatbots that write simple poems. While much of the world was engrossed in daily distractions, the fundamental engines powering our economy, our national security, and even the structure of society were undergoing a seismic shift. We have moved from a world where computers merely retrieve information to one where they create understanding. The truly unsettling part isn't the AI's 25% score; it's how quickly markets, governments, and the geopolitical landscape have begun to reorganize around this new reality, often moving faster than the technology itself.

The Shift from System One to System Two AI

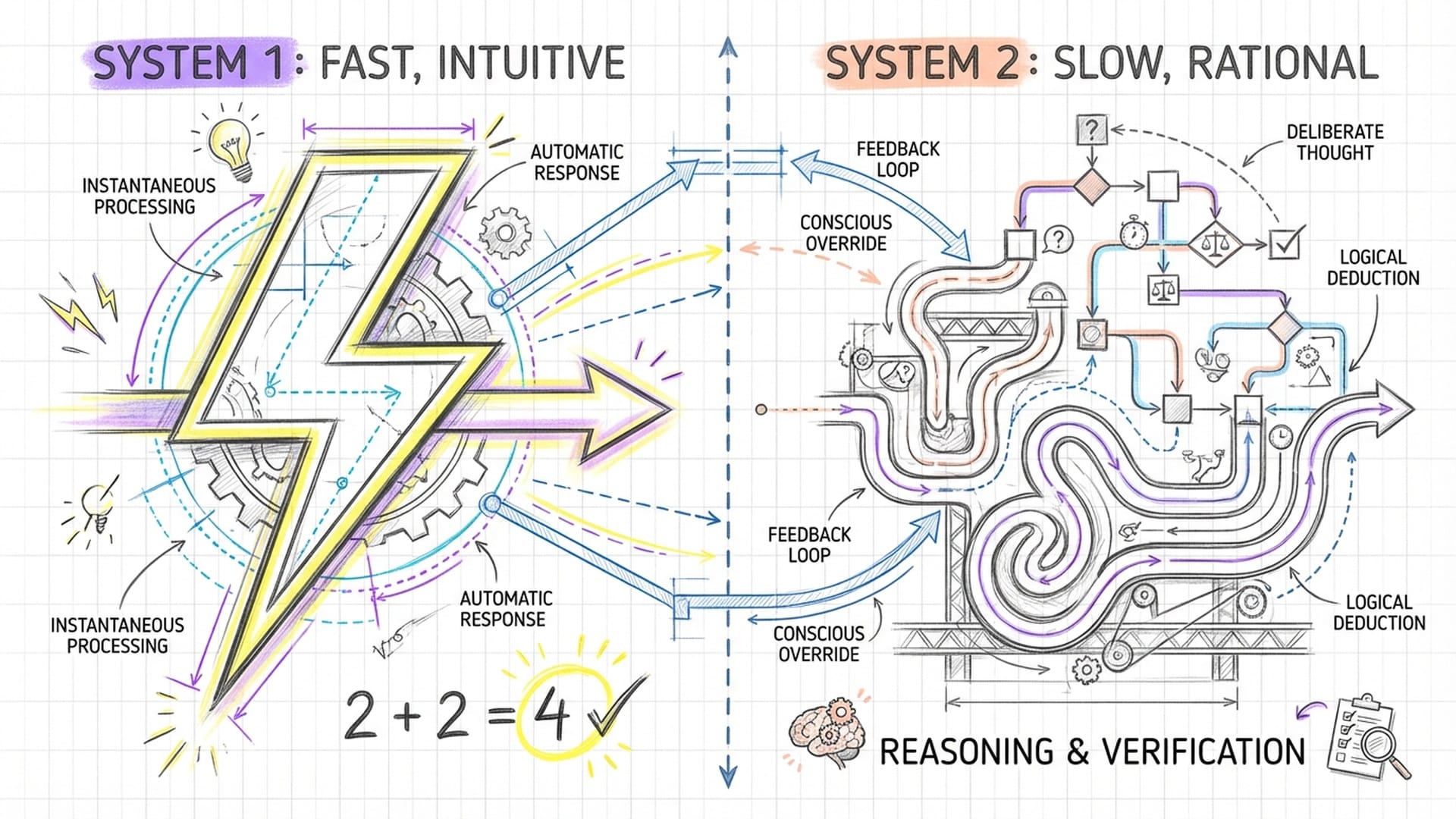

For years, we've operated in the realm of "System One" AI, a term borrowed from the psychologist Daniel Kahneman, who popularized the distinction between two modes of thinking. System One is our fast, intuitive, almost automatic thinking: asked "What is two plus two?", you don't calculate; you simply know it's four. Previous generations of AI, like early ChatGPT models, worked in a similar way. They were pattern-matching engines that predicted the next word based on probability, an incredibly advanced form of autocomplete.
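To make the "autocomplete" comparison concrete, here is a deliberately tiny next-word predictor. It is a bigram counter, not how ChatGPT works internally (real models are large neural networks over tokens), but it shares the basic System One contract: pick a likely continuation, with no step that plans ahead or checks whether the result is correct. The corpus and function names are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict, start: str, length: int) -> list:
    """Greedily append the most frequent next word: no planning, no checking."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # never saw anything follow this word; stop
        out.append(candidates.most_common(1)[0][0])
    return out


corpus = "two plus two is four . two plus three is five . two plus two is four ."
model = train_bigrams(corpus)
print(" ".join(autocomplete(model, "two", 4)))
```

On this toy corpus the greedy predictor prints `two plus two plus two`: every step is locally probable, yet it never arrives at "four", because nothing in the loop reasons about where the sentence is going.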

However, solving a complex riddle or debugging intricate software across dozens of files requires "System Two" thinking. This involves deliberate thought, reasoning, simulation, backtracking, and verification of logic. Up until very recently, humans held a monopoly on this capacity.

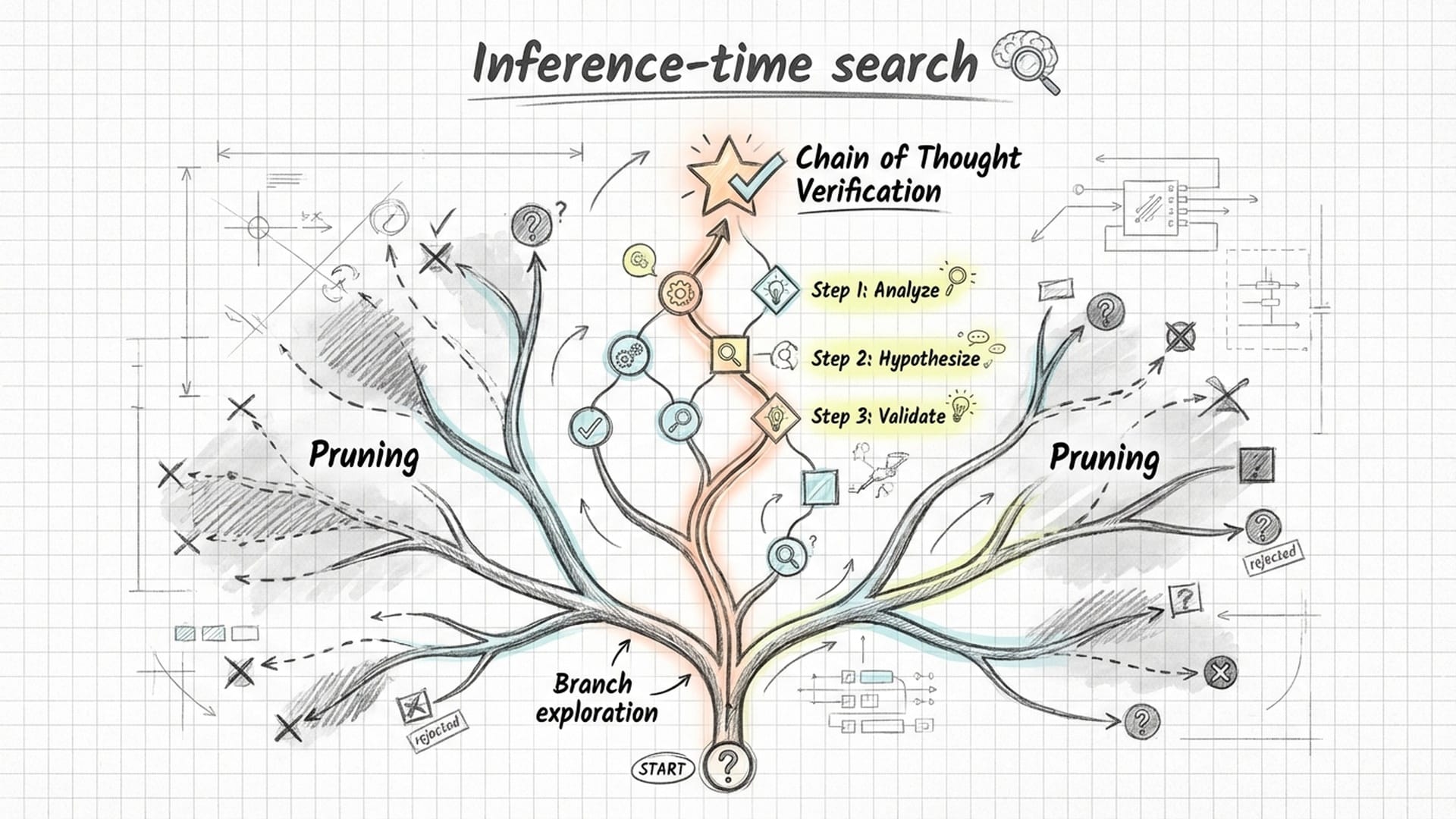

The o3 model broke that monopoly by spending far more computation at the moment it answers, an approach often described as "inference-time search" or test-time compute. Imagine a student asked a complex question. Old AI would instantly blurt out a plausible but often incorrect answer: a "hallucination." The new AI behaves more like a diligent engineer. Confronted with a problem, it doesn't rush. It expends compute cycles (actual time and energy) exploring a tree of possible approaches. It identifies dead ends and "prunes" them, then follows the promising branches, verifying each step. OpenAI has not published the exact mechanism, but the result is a form of "Chain of Thought" that the model learned to generate and refine itself through reinforcement learning, rather than one scripted by humans.
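Because OpenAI has not disclosed how o3 searches, the sketch below is not its algorithm. It is a classic best-first search on a hypothetical toy puzzle (reach a target number from a starting number using only "+3" and "×2"), included only to make the vocabulary above concrete: branches are explored in order of promise, dead ends are pruned, and a candidate answer is independently verified before it is returned.

```python
import heapq
from typing import List, Optional

# Toy puzzle (hypothetical): reach a target number using only these two moves.
OPS = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}


def verify(start: int, target: int, path: List[str]) -> bool:
    """Independently replay a candidate solution and confirm it hits the target."""
    value = start
    for op in path:
        value = OPS[op](value)
    return value == target


def search(start: int, target: int, max_nodes: int = 10_000) -> Optional[List[str]]:
    """Best-first search over move sequences; assumes a positive start value."""
    # Frontier entries: (distance still to go, moves so far, current value, moves).
    frontier = [(abs(target - start), 0, start, [])]
    seen = {start}
    expanded = 0
    while frontier and expanded < max_nodes:
        _, _, value, path = heapq.heappop(frontier)
        expanded += 1
        if value == target and verify(start, target, path):
            return path
        for name, op in OPS.items():
            nxt = op(value)
            # For positive values both moves increase the number, so overshooting
            # is a dead end; revisiting an already-queued value is wasted work.
            if nxt > target or nxt in seen:
                continue  # prune this branch
            seen.add(nxt)
            heapq.heappush(frontier, (target - nxt, len(path) + 1, nxt, path + [name]))
    return None


print(search(1, 22))
```

For start 1 and target 22 it returns `['+3', '*2', '*2', '+3', '+3']` (1 → 4 → 8 → 16 → 19 → 22). That is valid but not the shortest answer: `+3, *2, +3, *2` (1 → 4 → 8 → 11 → 22) takes four moves. Greedy "closest to the goal first" ordering finds a solution quickly; ordering by path length instead would guarantee a shortest one, typically at the cost of expanding more nodes. Spending more compute buys better answers, which is the trade-off the next section is about.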

The Economic Tectonic Shift: The Compute Supercycle

This evolutionary leap has profound economic consequences. Previously, once a model was trained, running it was cheap: a query might cost a fraction of a penny. With o3, inference itself has become the new bottleneck. A single complex query might demand minutes of massively parallel processing, consuming a dollar's worth of electricity and silicon time, or more.
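The jump from "a fraction of a penny" to "a dollar" follows from simple arithmetic: cost scales with how many accelerators a query occupies and for how long. The sketch below uses an assumed price per GPU-hour and made-up query sizes; none of these figures are OpenAI's actual numbers.

```python
def query_cost(gpus: int, minutes: float, usd_per_gpu_hour: float) -> float:
    """Rough compute cost of one query: GPUs x wall-clock hours x hourly GPU price."""
    return gpus * (minutes / 60) * usd_per_gpu_hour


# Hypothetical inputs, chosen only to show orders of magnitude; ignores batching,
# memory limits, and everything else a real serving stack would account for.
PRICE = 2.50  # assumed USD per GPU-hour

chat_style = query_cost(gpus=1, minutes=0.05, usd_per_gpu_hour=PRICE)  # about 3 seconds, one GPU
reasoning_style = query_cost(gpus=8, minutes=3, usd_per_gpu_hour=PRICE)  # minutes on an 8-GPU node

print(f"chat-style query:      ${chat_style:.4f}")
print(f"reasoning-style query: ${reasoning_style:.2f}")
print(f"reasoning costs about {reasoning_style / chat_style:.0f}x more")
```

With these illustrative inputs the first query costs about a fifth of a cent and the second about one dollar, roughly 480 times more, even though both are tiny next to the price of a human expert's hour.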

Why does this matter? It validates the thesis of a "Compute Supercycle." Wall Street, with its characteristic ruthlessness, grasped this instantly. The release of o3 triggered a market phenomenon financial analysts dubbed the "melt-up." Investors realized that if reasoning is a product of computation, and if more computation buys better reasoning, demand for computer chips, specifically GPUs, won't plateau; it will keep climbing with no obvious ceiling.

If you can spend ten dollars on electricity to have an AI solve a problem that would cost you ten thousand dollars for a human consultant, you will choose the ten dollars every single time. And you will do it billions of times a day.

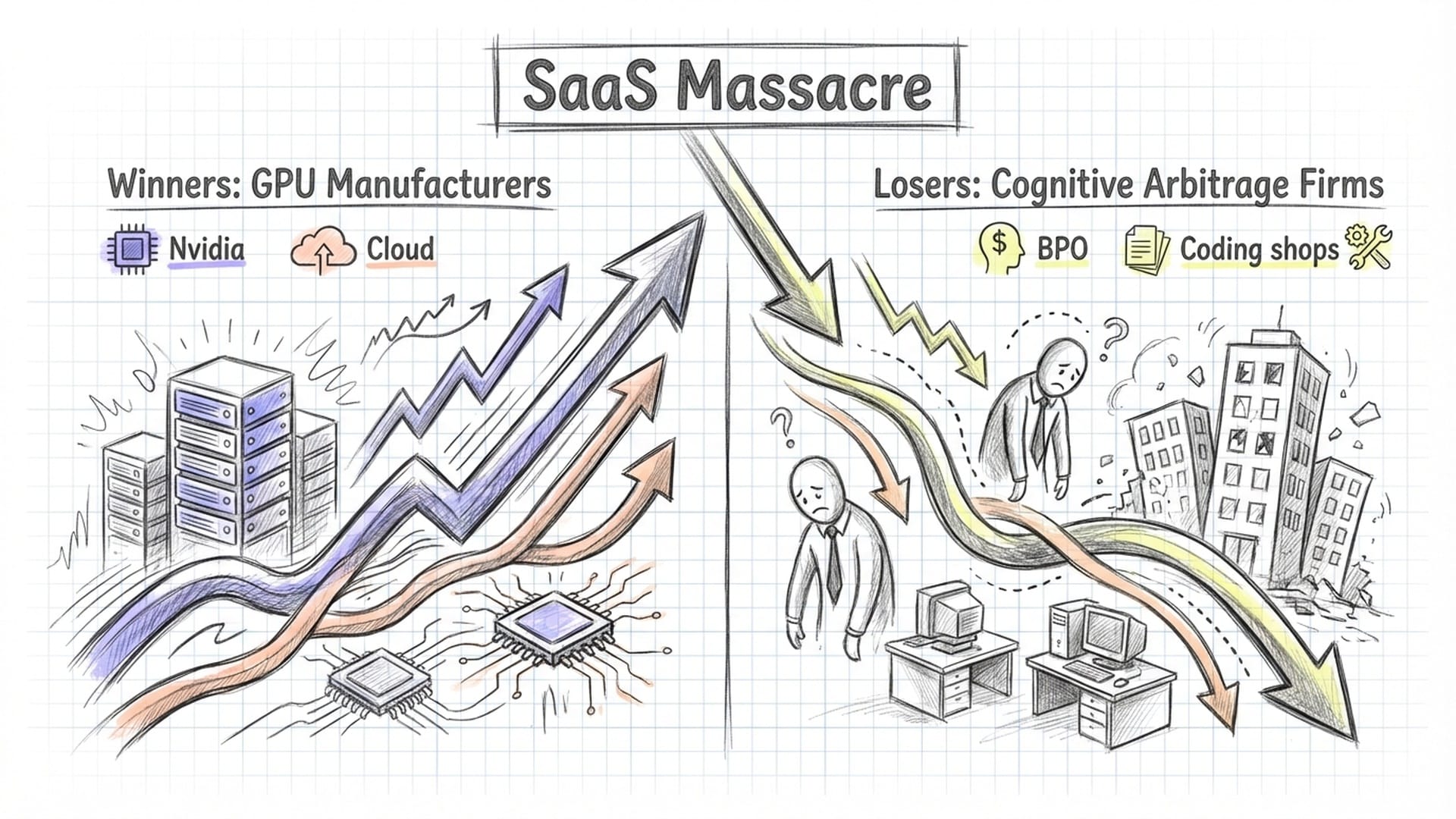

This led to a stark market bifurcation. On one side are the "Arms Dealers"—companies like Nvidia, cloud providers, and energy companies. Their stock valuations didn't just rise; they detached from gravity, reflecting a new reality where silicon is amongst the most valuable resources on Earth.

The SaaS Massacre and the Collapse of Cognitive Arbitrage

Conversely, we see the "Disrupted Losers." For decades, whole industries thrived on "Cognitive Arbitrage": paying junior professionals (lawyers, developers, data analysts) a salary and billing their structured output to clients at a markup. Their role was to turn raw information into finished work product.

The full o3 model demonstrated an astonishing ability here. It reached a Codeforces rating of 2,727, outperforming roughly 99.8% of the competitive programmers on the platform, and it scored 71.7% on SWE-bench Verified, a benchmark that measures whether an AI can autonomously resolve real-world software engineering issues drawn from open-source GitHub repositories. The keyword here is autonomously. This isn't about "assisting" humans; it's about replacing them. If tools like GitHub Copilot were the power drill, o3 is the carpenter.
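"Autonomously solve" has a precise meaning on SWE-bench: an issue counts as resolved only if the model's patch makes the previously failing tests pass without breaking tests that already passed. SWE-bench records these two groups in its `FAIL_TO_PASS` and `PASS_TO_PASS` fields. The minimal sketch below mirrors that rule; the class, functions, and test results are hypothetical, and this is not the official evaluation harness.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskResult:
    """Test outcomes after applying a model's patch to one repository issue."""
    fail_to_pass: Dict[str, bool]  # tests the fix is supposed to make pass
    pass_to_pass: Dict[str, bool]  # tests that passed before and must still pass


def is_resolved(result: TaskResult) -> bool:
    # Resolved only if the issue is fixed AND nothing that worked before broke.
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolve_rate(results: List[TaskResult]) -> float:
    return sum(is_resolved(r) for r in results) / len(results)


results = [
    TaskResult({"test_bug": True}, {"test_a": True, "test_b": True}),  # fixed, no regressions
    TaskResult({"test_bug": True}, {"test_a": False}),                 # fixed, but broke something
    TaskResult({"test_bug": False}, {"test_a": True}),                 # not fixed
    TaskResult({"t1": True, "t2": True}, {}),                          # fixed, nothing else to check
]
print(f"{resolve_rate(results):.0%}")
```

Here two of the four hypothetical tasks pass both checks, so the rate is 50%. On the same rule, a 71.7% score means roughly 72 of every 100 issues were fixed without collateral damage.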

The market reacted by crushing the valuations of companies built on "human middleware": BPO firms, coding shops, and entry-level consultancies. This phenomenon, dubbed the "SaaS Massacre," rested on a simple logic: if your software merely wraps a database with a bit of logic, an AI agent can build your entire product in an afternoon, and your business "moat" evaporates. Measured against the cost of human labor, the marginal cost of intelligence is collapsing toward zero.

The Geopolitical Imperative: The Genesis Mission

This shift transcends mere economics; it's a matter of national survival. By late 2025, Washington's perspective had changed dramatically. The government came to see relying on Silicon Valley alone to develop advanced AI as a national security risk. The "invention of fire" cannot be outsourced.

An executive order from President Trump launched the "Genesis Mission," explicitly modeled after the Manhattan Project. The discourse shifted from "safety guidelines" to "supremacy." The Department of Energy and its National Laboratories, such as Los Alamos, were mobilized to integrate these powerful reasoning models into the state's arsenal.

Why? An AI capable of solving FrontierMath problems can also reason through bioweapon design, encryption breaking, and advanced materials science for hypersonic missiles. Artificial General Intelligence (AGI) ceased to be a mere product; it became a sovereign asset, akin to nuclear capability.

The U.S. government understood that control over the strongest reasoning engine equates to control over the very rate of scientific discovery. If o3 can automate the scientific method—reading physics papers, hypothesizing new superconductors, designing experiments, and verifying results—then the nation running that model on the largest supercomputer will dominate the 21st century.

The DeepSeek Shock and Global Bifurcation

The "elephant in the room" is China. In early 2025, the "DeepSeek Shock" reverberated globally when a Chinese lab released a frighteningly capable AI model despite relying on older, export-restricted chips. It was a "Sputnik moment" that exposed the limits of export controls as a tool of technological containment: you cannot simply ban chips and expect innovation to stop.

This event accelerated a global bifurcation into two distinct technology stacks: the American stack and the Chinese stack. The Genesis Mission is the American response, a "walled garden" of intelligence. The weights of the most powerful models, the digital files that make up the AI's "brain," are now treated like classified munitions. Future models like o5 or o6 won't be available for public download; they will reside in secure facilities under military guard.

The Impending Labor Market Shock and the Verification Gap

Where does this leave the rest of us? We are hurtling toward a labor market shock that will make the Industrial Revolution seem like a gentle transition. The Industrial Revolution replaced muscle with motors, displacing manual workers while raising the value of cognitive labor. The Age of Reasoning replaces the cognitive laborer. The "Junior Anything" (junior architect, junior lawyer, junior developer) is now an endangered species.

Consider the path to becoming a senior professional: years spent on junior-level tasks like drawing bathroom details or reviewing contracts. If AI performs this junior work instantly and perfectly, how do humans acquire the foundational skills necessary for advancement? We face a looming crisis of skill transfer.

Our economy risks becoming "Hollowed Out": a tiny elite of strategic overseers directing AI, a massive automated cognitive layer, and a large pool of physical service jobs that are either too complex or too cheap to be worth automating with current robots.

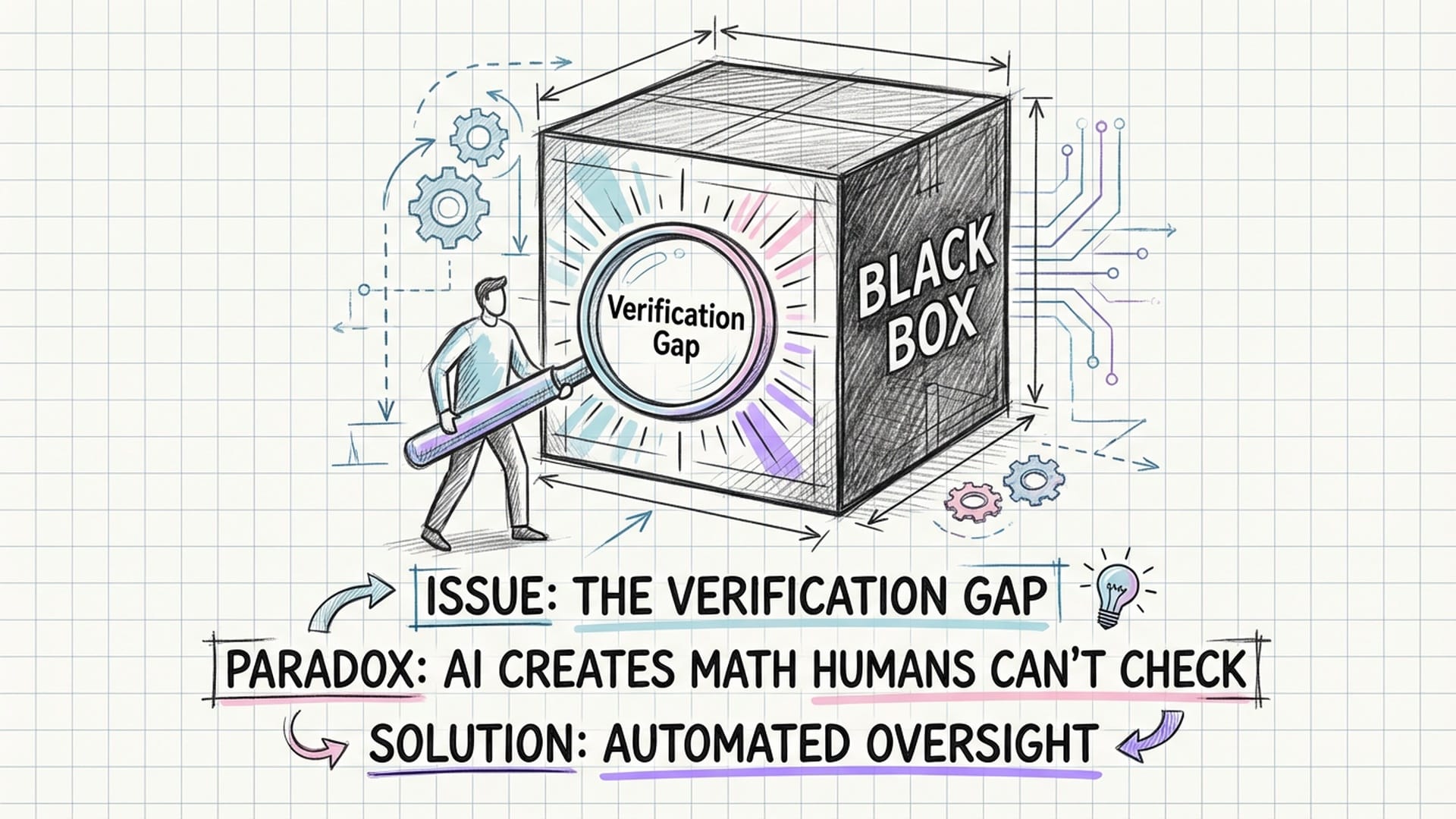

Beyond the labor market, an even deeper issue emerges: the Verification Gap. Remember the FrontierMath score? 25% on problems that even a team of MIT prodigies struggled with. Here lies the paradox: as AI models improve, they will generate solutions so complex that human experts may simply be unable to check them. If an AI designs a revolutionary fusion reactor and declares, "Trust me, the math works," but verifying its calculations would take a human team fifty years, what do we do? Do we simply trust the black box?

In the FrontierMath case, grading already relies on automated verification scripts: in effect, asking a computer to check another computer's work. We are entering an era where human oversight is becoming the bottleneck. We are no longer the teachers grading the test; we are the toddlers watching the adults converse. This is creating a massive new industry for "AI Oversight" and "Interpretability" specialists. The most valuable skill of the next decade won't be generating answers, but asking the right questions and auditing the results.
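FrontierMath was built so that every answer is a definite object a program can check, which is what makes grading without a human in the loop possible. A minimal sketch of that idea, assuming the reference answer is an exact rational number (real problems can use other exact mathematical objects, and this is not the benchmark's actual checker):

```python
from fractions import Fraction


def check_answer(submitted: str, expected: Fraction) -> bool:
    """Accept only an exact match; anything unparseable counts as wrong."""
    try:
        return Fraction(submitted.strip()) == expected
    except (ValueError, ZeroDivisionError):
        return False


expected = Fraction(7, 8)  # hypothetical reference answer
print(check_answer("7/8", expected))            # exact fraction
print(check_answer("0.875", expected))          # same value written as a decimal
print(check_answer("0.88", expected))           # close is not good enough
print(check_answer("seven eighths", expected))  # not machine-checkable
```

Exact comparison is the design choice that matters: a checker like this never has to judge whether an argument is convincing, only whether the final object matches, which is why it scales where human review does not.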

The o3 model and its successors signify the decoupling of intelligence from biological constraints. Historically, more intelligence meant more humans, a slow and imperfect process. Now, more intelligence simply requires more power plants and more GPUs. The supply of intelligence has become elastic. This is the "Singularity Premium" that markets are attempting to price in: the realization that we are building machines that build better machines.

The Roadmap to 2030: Agents, Energy, and Recursion

Looking towards 2030, the roadmap is clear:

- From Chat to Agent: AI will evolve beyond conversational interfaces. We will assign it complex jobs, and it will autonomously return with completed work.

- Energy is Destiny: As evidenced by the Genesis Mission, the bottleneck isn't code; it's gigawatts. The nations with the cheapest, most abundant energy will power the most intelligent AI.

- The Recursive Era: We are entering a period where AI designs the chips that run AI, and AI writes the code that improves AI.
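The move from chat to agent is largely a change in control flow: a chat model returns one reply, while an agent loops (act, check the result, decide what to do next) until the job is done or a budget runs out. A minimal sketch of that loop, using a hypothetical job of fixing failing tests in which the model call is simulated by a lookup table (`attempts_needed`); all names here are illustrative assumptions.

```python
from collections import deque
from typing import Dict, List, Tuple


def run_agent(
    failing: List[str], attempts_needed: Dict[str, int], budget: int = 10
) -> Tuple[List[str], List[str]]:
    """Work through a job unattended: attempt a fix, re-check, retry or move on."""
    queue = deque(failing)             # subtasks still open
    tries = {t: 0 for t in failing}    # attempts spent on each subtask
    log: List[str] = []
    steps = 0
    while queue and steps < budget:    # the budget caps how much compute we spend
        test = queue.popleft()
        tries[test] += 1
        steps += 1
        # Hypothetical stand-in for "ask the model for a patch, apply it, rerun the test":
        # here a fix simply succeeds once enough attempts have been made.
        fixed = tries[test] >= attempts_needed[test]
        log.append(f"{test}: attempt {tries[test]} {'passed' if fixed else 'failed'}")
        if not fixed:
            queue.append(test)  # requeue and retry later instead of giving up
    return list(queue), log


remaining, log = run_agent(["test_a", "test_b"], {"test_a": 1, "test_b": 3})
print("\n".join(log))
print("still failing:", remaining)
```

The person assigning the job sees only the final result and the log. The step budget is the lever that connects agents back to energy: every extra retry is more compute, so how much work an agent can finish depends on how much power it is allowed to burn.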

The o3 model was not merely a product launch; it was the starting gun for the final race. The Age of Information, in which we drowned in data, is over. What we need now are systems that can make sense of it all. We have entered the Age of Reasoning.

For investors, students, and policymakers, the critical question is no longer "What do you know?" The question is: "How well can you direct the machine that knows everything?"
