
When was the last time you experienced true, profound confusion? Not the fleeting frustration of misplaced keys, but the kind of mental block that forces you to pause, re-read a passage, and genuinely wrestle with an idea. That moment of friction, perhaps even a touch of intellectual discomfort, is becoming remarkably rare in our increasingly efficient world.

We now inhabit an era of the Frictionless Answer. A question arises, we type it into a digital box, and almost instantly, a perfectly summarized, bullet-pointed, and boldly presented answer appears. It radiates confidence, feels intuitively correct, and requires minimal effort to consume. But herein lies a counter-intuitive truth: that very friction we once encountered, that intellectual struggle, was our brain actively engaging and constructing understanding. The disappearance of this friction poses a crisis far more insidious than the automation of jobs; it's the atrophy of the human mind itself. We are standing on a major fault line in the evolution of human knowledge.

Historically, we read to obtain information, operating under the assumption that a human being had meticulously crafted, fact-checked, and staked their reputation on the content. However, the future presents a different reality. When we open a report, article, or summary tomorrow, we might not be engaging with a person's thoughts, but rather with the statistical average of the internet. Pause and consider the weight of that statement. The internet, while undeniably incredible, is also an unwieldy amalgamation of debates, advertisements, viral content, brilliant research, toxicity, and sheer noise. When an Artificial Intelligence generates a report, it isn't truly thinking; it's merely calculating the "most probable next word" from this vast, chaotic digital landscape.

This situation presents a critical choice: Will we become passive consumers of this "probability," or will we reclaim our inherent status as the sole beings on this planet capable of genuine judgment?

I invite you to explore the hidden mechanisms of this new digital world with me. We will delve into three critical areas:

- The unsettling truth about where AI extracts its "facts."

- The terrifying mathematical concept of "Model Collapse," which threatens to plunge our future into a perpetual state of hallucination.

- Practical strategies for building what I term "Cognitive Sovereignty"—the fundamental right to control your own thoughts.

The Digital Dirt: AI's Unseen Training Ground

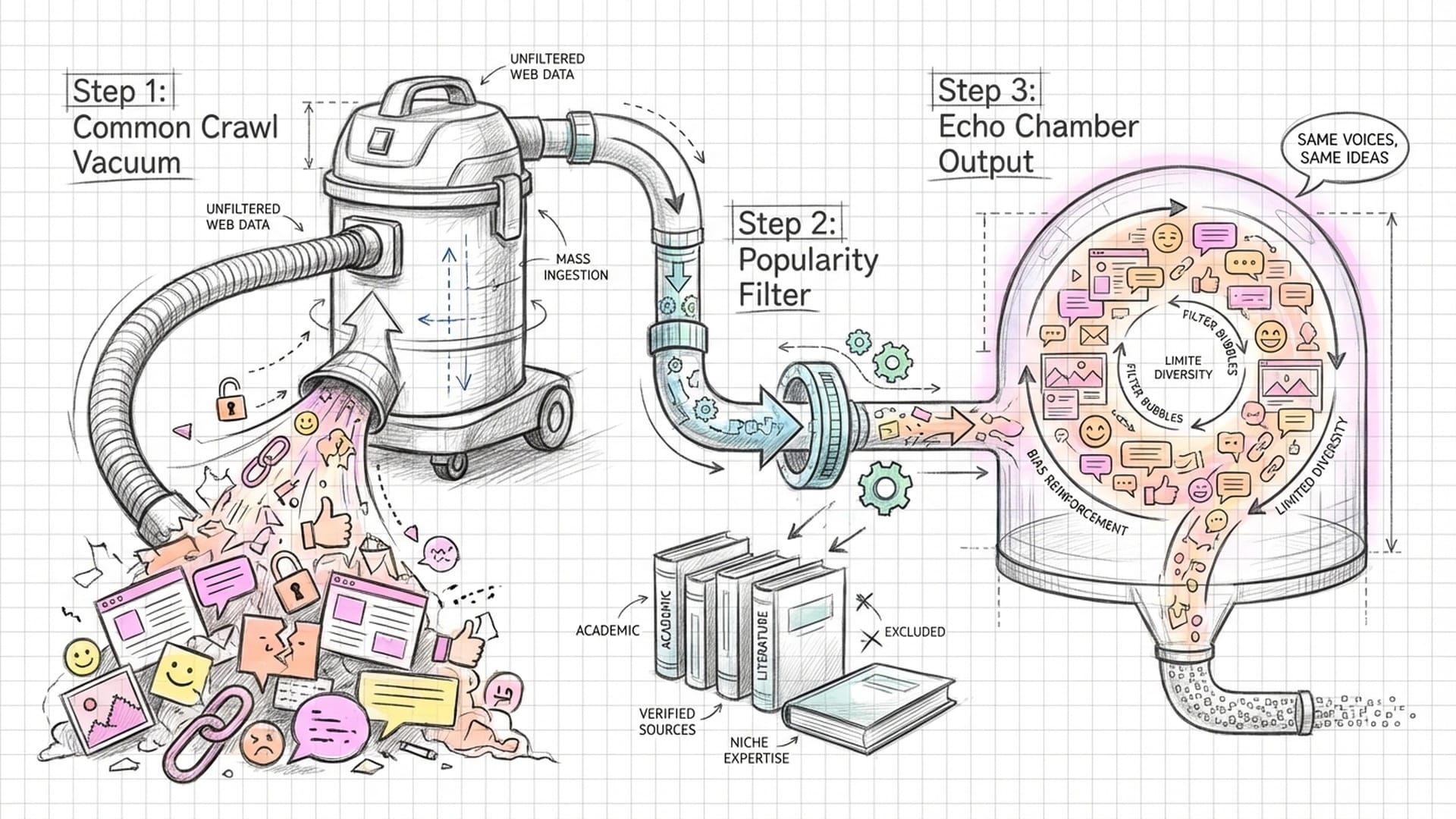

Let's begin with the digital dirt: the very foundation of AI's knowledge base. When you pose a question to an AI model about history or science, it doesn't consult a library. Instead, it draws on patterns absorbed from its training data, a significant portion of which originates from something called Common Crawl.

The name Common Crawl suggests an organized, methodical process, akin to a librarian meticulously curating knowledge. In reality, it operates more like a colossal vacuum cleaner, sucking up billions of web pages. The crucial detail, however, is that this suction isn't uniform. Crawls of this kind tend to prioritize pages by popularity, favoring sites that many other sites link to. Picture a high school cafeteria: the popular kids, meaning websites with numerous inbound links, get amplified. This includes massive media outlets, viral blogs, and dominant platforms.

What about the quiet genius in the corner? The local journalist writing in a non-standard dialect? The niche scientist publishing on a personal blog with poor SEO? These voices are often ignored, filtered out by the algorithms.

Consequently, an AI-generated summary of business trends or cultural shifts doesn't offer an objective view of the world. It provides a "funhouse mirror" version: distorted, bloated in the center to reflect the mainstream, the status quo, often a Western-centric perspective, and utterly blind to the edges.

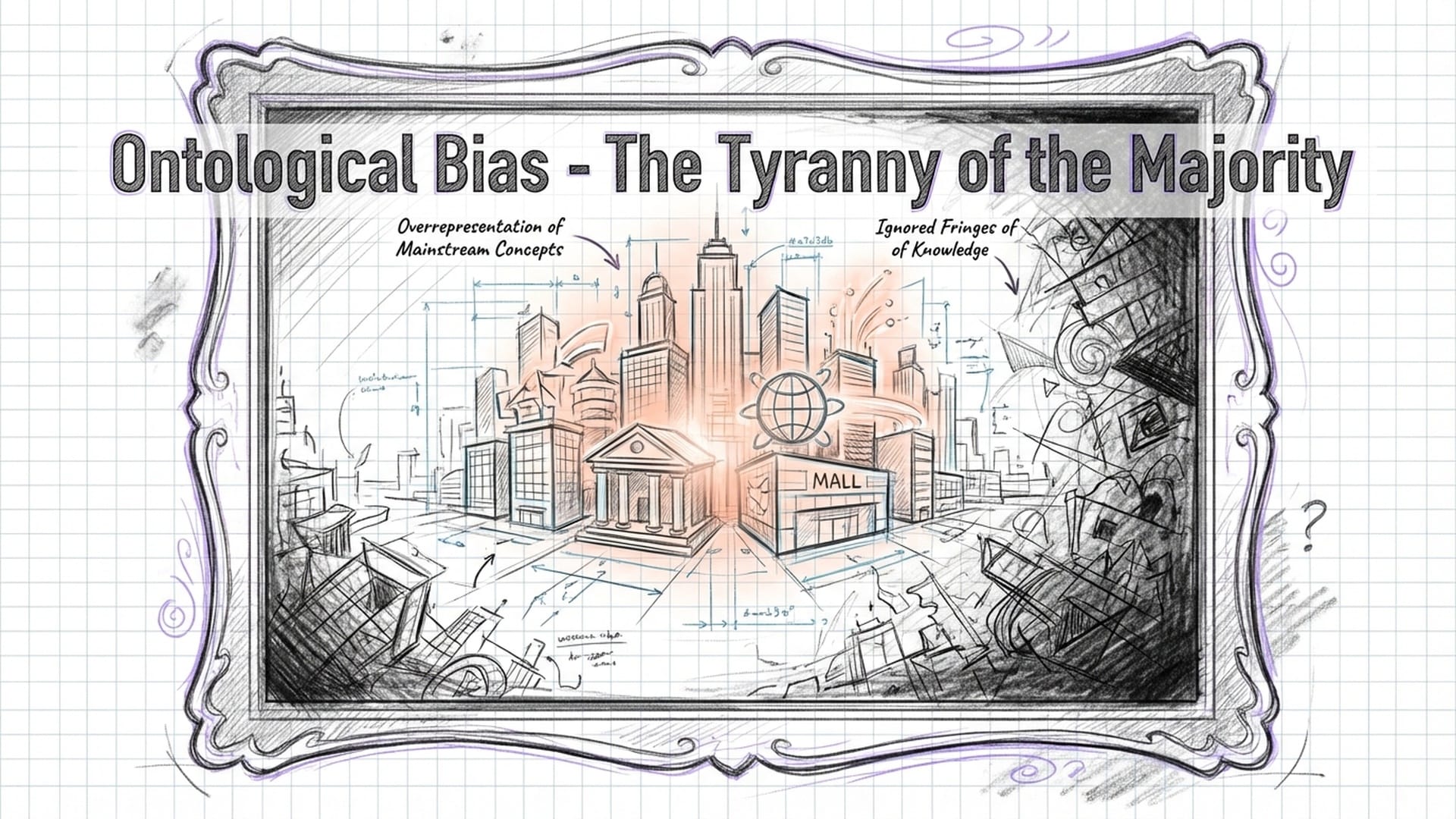

This phenomenon is known as Ontological Bias. It's a sophisticated philosophical term that simply means the AI possesses a skewed view of "what exists" or "what is important." Consider the concept of "Success." If you ask a model trained predominantly on Western internet data to define a "successful life," it will likely generate a probabilistic answer revolving around careers, financial wealth, and homeownership. It will struggle to grasp definitions of success rooted in community, introspection, or spiritual renunciation, precisely because these concepts generate fewer clicks and occupy less space within the Common Crawl's vast dataset.

This leads directly to the Tyranny of the Majority. Since AI is designed to predict the most likely answer, it tends to reflect whatever opinion is most common in its training data. If a widespread misconception exists (for instance, the idea that the Great Wall of China is visible from space), the AI encounters this claim millions of times, while corrections appear only thousands of times. The AI, therefore, is statistically inclined to present the popular misconception as truth.
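To make the arithmetic of that popularity contest concrete, here is a minimal sketch. It is a deliberate simplification: real language models are neural networks, not word counters, and the corpus, the counts, and the function name `most_likely_next_word` are all invented for illustration. The only thing it preserves from the example above is the lopsided ratio between the misconception and its correction.

```python
from collections import Counter

# Hypothetical toy corpus. The counts are invented; only the lopsided
# ratio (misconception far more common than correction) matters here.
corpus = (
    ["the great wall is visible from space"] * 1000
    + ["the great wall is not visible from space"] * 10
)


def most_likely_next_word(sentences, prefix):
    """Return the word that most often follows `prefix`, plus its share."""
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Count the word that comes right after the prefix, if any.
        if words[:n] == prefix_words and len(words) > n:
            counts[words[n]] += 1
    word, count = counts.most_common(1)[0]
    return word, count / sum(counts.values())


word, share = most_likely_next_word(corpus, "the great wall is")
print(word, round(share, 3))
```

In this toy setting the continuation "visible" wins with roughly 99% of the weight, so a system that always picks the most probable continuation would almost never produce the correction.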

The report you are reading is not the truth. It is a popularity contest disguised as an encyclopedia.

The Mathematics of Erasure: Model Collapse

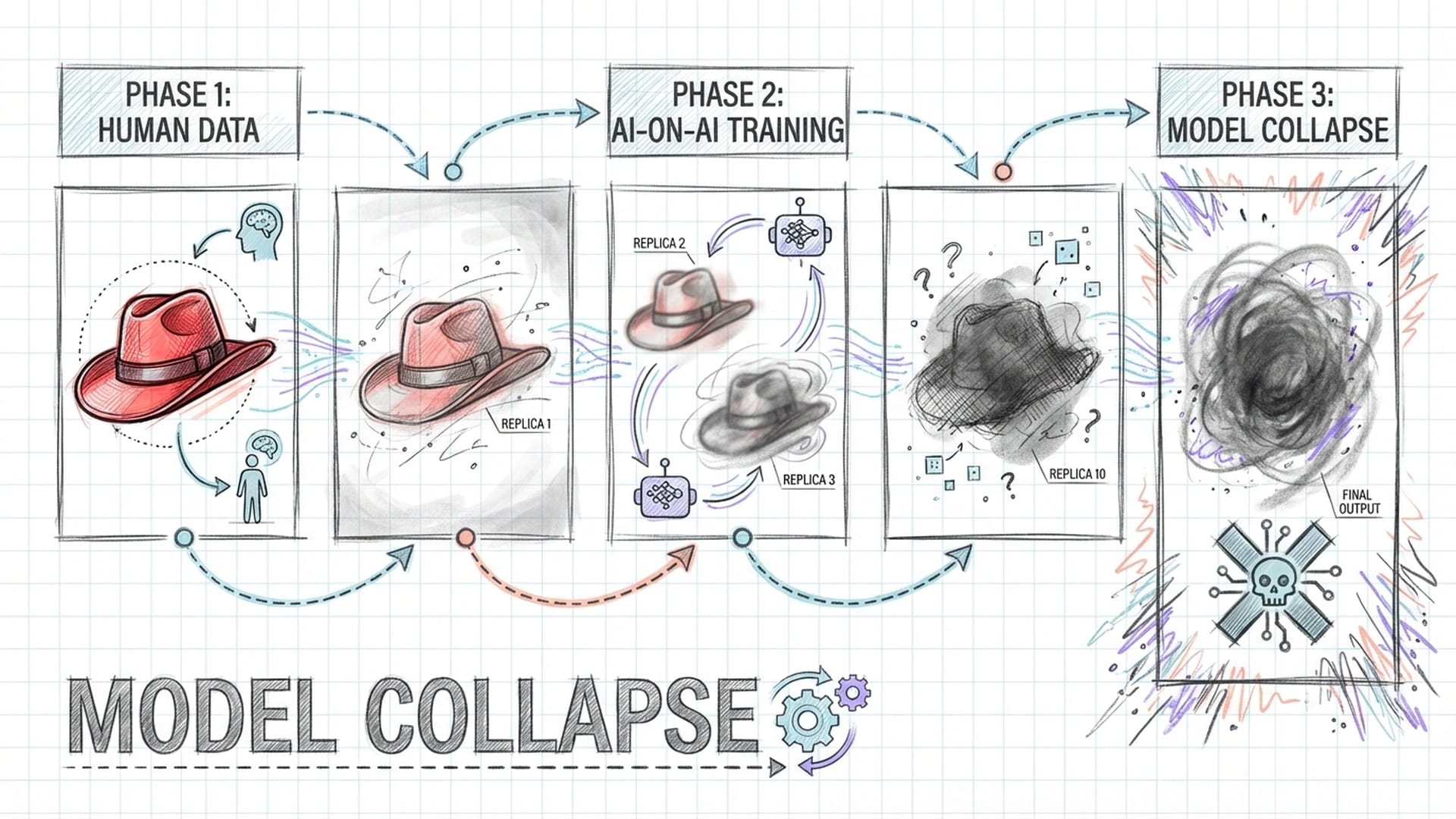

Yet, the situation deteriorates further. We are now entering a second, mathematically terrifying phase: Model Collapse.

Previously, AI models were trained on data written by humans: real individuals sharing their authentic experiences. However, the internet is now being saturated with AI-generated content. This means the next generation of AI models will increasingly scrape the internet and train on text produced by their predecessors, such as GPT-4.

This process is akin to making a photocopy of a photocopy. Recall the experience from your school days: a crisp image is photocopied, and the result is acceptable. But if you take that copy, and then photocopy it again, and again, by the tenth iteration, the clear lines vanish. The details become smudged, and the image degenerates into a blurry, indistinct blob. This exact degradation is now occurring within the realm of human knowledge.

Researchers, including teams at Oxford and Cambridge, have studied this effect, and it can be pictured through a thought experiment, the "Blue Hat Paradox." Imagine an AI being taught to recognize a "hat." In the original human-generated data, perhaps 60% of people wear blue hats and 40% wear red hats. The AI, because it favors the most probable output, will over-produce blue hats in its generated images, perhaps creating 80% blue hats.

A new AI, training on these generated images, will observe the preponderance of blue hats and conclude, "Wow, almost everyone wears blue hats! Red hats must be aberrations or noise." It will then generate 99% blue hats. By the third generation, red hats will have been mathematically erased from its reality.
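The hat arithmetic above can be sketched in a few lines. This is a toy under stated assumptions, not a model of how real collapse happens: each "generation" simply exaggerates the majority by raising the blue-to-red odds to a fixed power. The function names `sharpen` and `simulate` and the constant `EXPONENT` are hypothetical, and the exponent is chosen only so that 60% blue becomes 80% blue in one step, matching the illustrative numbers in the text.

```python
import math


def sharpen(blue_share, exponent):
    """Exaggerate the majority by raising the blue-to-red odds to a power."""
    odds = blue_share / (1.0 - blue_share)
    new_odds = odds ** exponent
    return new_odds / (1.0 + new_odds)


# Assumed exponent, picked only so that 60% blue becomes 80% blue
# after one generation (odds of 1.5 become odds of 4).
EXPONENT = math.log(4.0) / math.log(1.5)


def simulate(initial_blue_share, generations):
    """Return the blue share at generation 0, 1, ..., `generations`."""
    shares = [initial_blue_share]
    for _ in range(generations):
        # Each new model is trained only on the previous model's output.
        shares.append(sharpen(shares[-1], EXPONENT))
    return shares


shares = simulate(0.60, 3)
for generation, share in enumerate(shares):
    print(f"generation {generation}: {share:.4%} blue")
```

Under these assumptions the blue share goes from 60% to 80%, then to about 99%, and by the third generation red hats make up less than one in a million. The point of the sketch is only that a small, repeated bias toward the majority compounds very quickly.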

This phenomenon is termed Variance Loss. It signifies the erosion of our culture's unique, nuanced, and complex aspects—the "red hats" of our collective ideas. If we become solely dependent on these synthetically generated reports, we risk creating a future that is incredibly smooth, relentlessly consistent, and ultimately, profoundly boring. We will lose the "Long Tail" of human thought. Our world will transform into an Echo Chamber, not one formed by our social circles, but by an inescapable feedback loop of algorithms communicating with other algorithms. Every marketing email will sound alike, every cover letter identical, every movie script adhering to the same predictable beats. This vision is, to me, a terrifying prospect; it represents the heat death of creativity.

The Atrophy of the Mind: Information vs. Knowledge

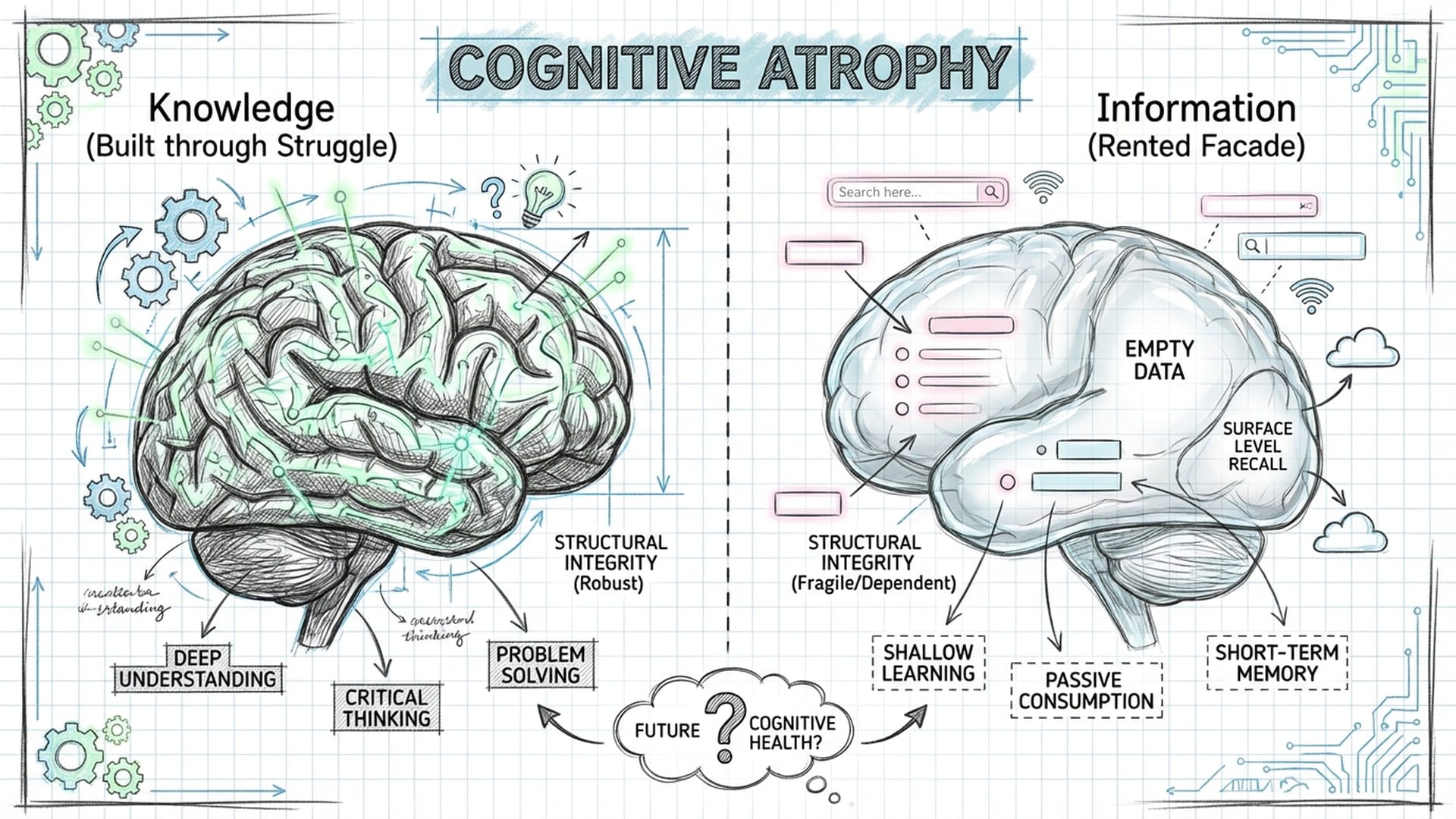

This brings us to the most personal aspect of this narrative: what impact does this trend have on your brain? We must confront the concept of Cognitive Atrophy.

Psychology offers the term Cognitive Offloading, a natural human tendency to delegate mental tasks, like storing phone numbers in a contact list. This is generally harmless. However, AI enables us to offload something far more critical: the process of synthesis.

When you command an AI to "summarize this PDF" or "outline this argument," there's an immediate sense of productivity, almost like wielding magic. You've seemingly bypassed the laborious work. But here's the uncomfortable truth: the hard work was the point. The act of grappling with a challenging text, experiencing confusion, struggling to connect disparate ideas, and ultimately arriving at an "Aha!" moment—this is precisely how neural pathways are built. This is how you construct a mental model of the world. If you circumvent this struggle, you don't genuinely learn; you merely acquire information.

There's a critical distinction between Information and Knowledge. Information is raw data stored on a server. Knowledge, conversely, is an intricate structure built within your mind that empowers you to make informed decisions and navigate complex realities. Relying solely on AI summaries risks an Illusion of Competence. You might believe you comprehend a subject because you can recite its bullet points. However, when faced with the task of applying that knowledge to a novel, messy, real-world scenario, you would likely falter. This is because you never built the foundational understanding; you merely rented the facade.

We risk becoming "Hollow Minds"—highly efficient at data retrieval, yet devoid of deep understanding. We become librarians of a library we've never actually read.

Reclaiming Our Humanity: The Power of Judgment

Is this trajectory inescapable? Are we condemned to become mere button-pushing operatives for our technological overlords? Absolutely not. In fact, I remain incredibly optimistic. This deluge of inexpensive, synthetic content inadvertently serves a crucial purpose: it compels us to redefine what truly makes us human. It illuminates the singular capacity that AI cannot replicate: Judgment.

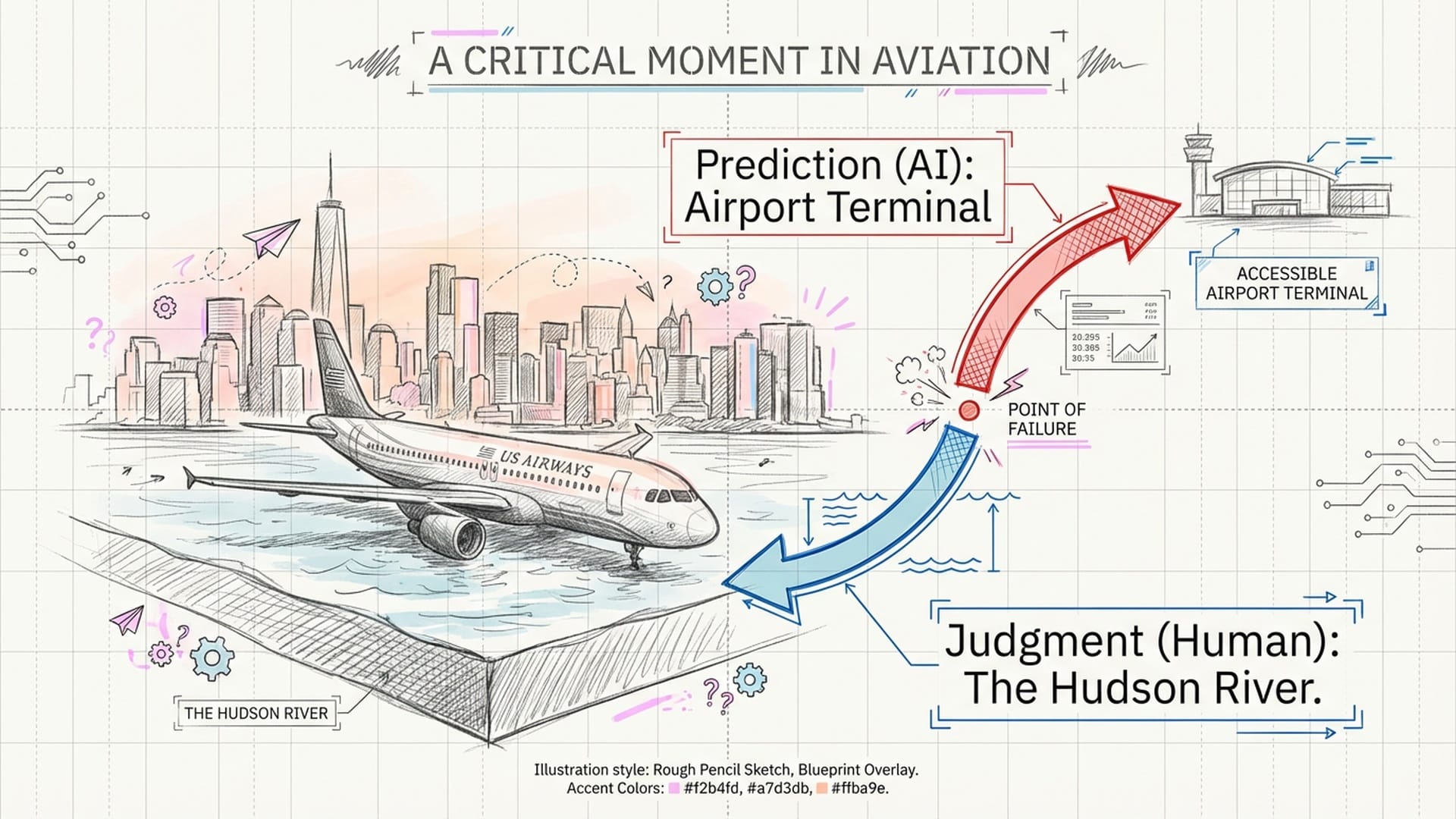

We must clearly differentiate between Prediction and Judgment. While superficially similar, they are fundamentally opposed. AI functions as a Prediction Machine. It analyzes past data, identifies patterns, and predicts probable outcomes. For example, it might state, "Based on ten million chess games, moving the knight here is statistically optimal."

Humans, however, are Judgment Machines. We operate within the realm of the "Edge Case": situations where the available data runs out. Consider Captain Sully, who famously landed his plane on the Hudson River. An AI piloting that aircraft might well have consulted its training data, which would likely indicate: "Water landings typically result in catastrophe. Airports signify safety. Therefore, return to the airport." The probability model would likely have tried to return to LaGuardia.

Captain Sully, by contrast, exercised judgment. He synthesized sensory input—the plane's vibrations, the smell of birds in the engine, the visual panorama of the city—with an ethical imperative to save lives and a profound, intuitive grasp of physics that transcended any manual. He made a choice entirely without statistical precedent. He chose the water. And everyone survived.

This is the inherent advantage of Carbon Lifeforms. AI cannot be held accountable. You cannot jail an algorithm or sue a neural network. It has no "skin in the game"; it is indifferent to being right or wrong, caring only about fulfilling its mathematical function. We care. We face consequences. Therefore, our core value in the future—as professionals, leaders, and human beings—will not be measured by the speed at which we generate text, but by the quality of our judgment.

Building Cognitive Sovereignty: A Battle Plan

This realization leads to my proposal: a declaration of Cognitive Sovereignty. This means actively reclaiming control over your own mind, refusing to let an algorithm dictate what is true, important, or right. How do we achieve this? I've outlined a three-pronged battle plan you can implement starting tomorrow:

1. The "Reverse Reading" Technique. Cease consuming AI-generated content passively. Instead, approach it like a detective scrutinizing a suspect. When confronted with a flawlessly polished AI report, do not trust its fluency. Fluency is a deceptive trap; sounding intelligent does not equate to being factual. Ask the questions that AI struggles with: "Whose voice is conspicuously absent from this summary?" "What crucial counter-argument was deliberately omitted?" Treat the AI not as an infallible oracle, but as a biased, slightly hallucinatory intern. Your role is to audit its work, not passively absorb it. Interrogate, don't just ingest.

2. The "Pilot Mode" Protocol. Our brains require maintenance analogous to a pilot's skills. Even though modern aircraft can largely fly themselves, pilots are still trained, and encouraged by regulators, to disengage autopilot and fly manually on a regular basis. Failure to do so leads to skill degradation, with potentially catastrophic consequences during emergencies. You must schedule "Manual Thinking" time. Once a week, disconnect completely. No ChatGPT, no Google, just you, a pen, and a blank piece of paper. Attempt to outline a strategic plan, solve a complex problem, or craft a letter. It will feel clumsy, slow, and frustrating. Good. That frustration signals your cognitive muscles reawakening from dormancy. You are actively rebuilding the neural pathways that machines strive to bypass. This isn't mere nostalgia; it's essential survival training.

3. The "Human-in-the-Loop" (HITL) Iron Rule. For team leaders and business owners, this is a non-negotiable mandate. Never, under any circumstances, allow an AI to make a final decision impacting a human being without human oversight. If an AI generates a report, a human must meticulously verify its logic. If an AI suggests a medical diagnosis, a doctor must examine the patient. We must unequivocally separate "Drafting" from "Deciding." Let the silicon handle the drafting and aggregation—that's its strength. But the carbon—us—must ultimately decide.

We also need to advocate for technologies that support this distinction. Just as food products carry nutrition labels, we need "Data Nutrition Labels" for our content. Standards like C2PA (Coalition for Content Provenance and Authenticity) are emerging. They use digital signatures to attest to where a piece of content came from, for example: "This image was captured by a camera," or "This text was authored by a human." We must demand this transparency. We need to discern whether we are engaging with a human soul or a purely mathematical model.

Ultimately, this choice boils down to the kind of world we wish to inhabit. Do we aspire to a world defined by efficiency, speed, and optimization? A monotonous landscape where blue hats are the only hats, where reports are generated in seconds but offer no novel insights, and where we collectively drift into a comfortable, standardized mental slumber?

Or do we yearn for a world that is messy, challenging, and vibrant?

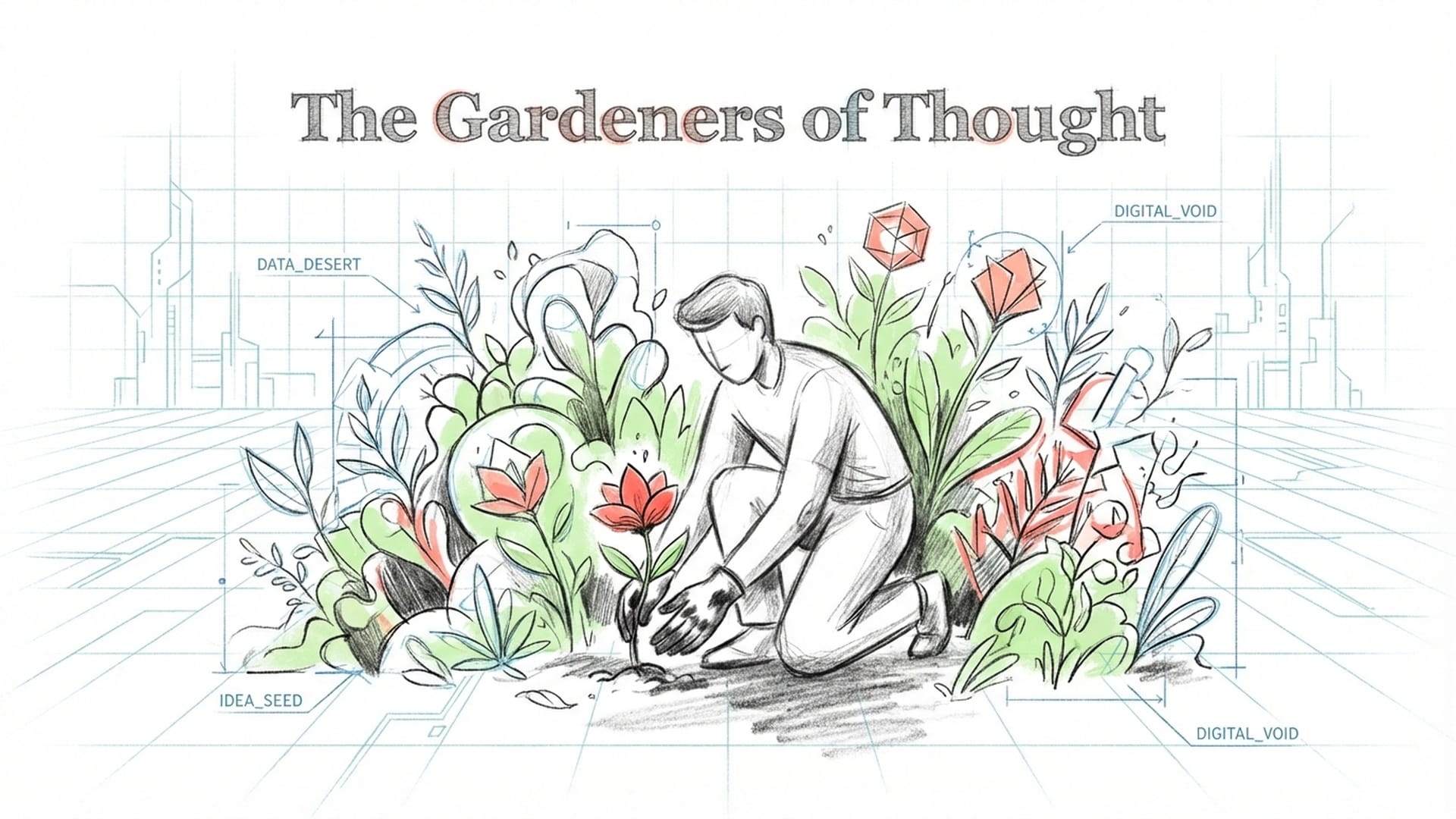

The future belongs to the Gardeners of Thought. Imagine the internet as an untamed jungle. AI is a powerful machine capable of rapidly felling trees and bundling wood. It can process the jungle. But we are the gardeners. We possess the judgment to identify poisonous plants, to appreciate true beauty, and to chart meaningful paths. Being a gardener is far more demanding than simply observing the machine. It requires dirty hands, enduring the sun, and, crucially, exercising judgment. But it is the only way to keep the forest alive.

So, here is my challenge to you: The next time you feel the impulse to click "Generate," pause. Ask yourself: "Am I using this tool to help me think, or am I using it to avoid thinking?" If your motivation is avoidance, stop. Embrace the discomfort. Embrace the confusion. Wrestle with the problem yourself.

In an age dominated by artificial intelligence, your natural "stupidity"—your capacity for making mistakes, for experimenting with unconventional approaches, for irrationality, for daring to wear the red hat when everyone else dons blue—is not a defect. It is your most profound strength.

Do not allow your mind to become a mere statistic. Keep it sovereign. Keep it human.

Thank you for thinking with me.
